Data Store

The simplest use case of Kinvey is storing and retrieving data to and from your cloud backend.

The basic unit of data is an entity and entities of the same kind are organized in collections. An entity is a set of key-value pairs which are stored in the backend in JSON format. Kinvey's libraries automatically translate your native objects to JSON.
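To make the "key-value pairs stored as JSON" idea concrete, here is a minimal sketch that uses only Foundation. It does not use the Kinvey SDK; `BookRecord`, `toJSON`, and `fromJSON` are hypothetical names chosen for illustration, and the SDK's own translation may differ in detail.

```swift
import Foundation

// Hypothetical plain-Swift stand-in for an entity; not a Kinvey SDK type.
struct BookRecord: Codable, Equatable {
    var title: String
    var author: String
}

// Encode a native value into JSON text. Keys are sorted for stable output.
func toJSON(_ book: BookRecord) throws -> String {
    let encoder = JSONEncoder()
    encoder.outputFormatting = [.sortedKeys]
    let data = try encoder.encode(book)
    return String(decoding: data, as: UTF8.self)
}

// Decode JSON text back into the native value.
func fromJSON(_ json: String) throws -> BookRecord {
    return try JSONDecoder().decode(BookRecord.self, from: Data(json.utf8))
}
```

Each property becomes one key-value pair in the JSON object, which is the shape the backend stores.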

Kinvey's data store provides simple CRUD operations on data, as well as powerful filtering and aggregation.

Collections

To start working with a collection, you need to instantiate a DataStore in your application. Each DataStore represents a collection on your backend.

//we use the example of a "Book" datastore

let dataStore = try DataStore<Book>.collection()

Through the remainder of this guide, we will refer to the instance we just retrieved as dataStore.

Optionally, you can configure the DataStore type when creating it. To understand the types of data stores and when to use each type, refer to the Data Store Types section.

Data Store Types

Cache store type has been deprecated, and will be removed from the SDK entirely as early as December 1, 2019. If you are using the Cache store, please make changes to move to a different store type. The Auto store type has been introduced as a replacement for the Cache store.

When you get an instance of a data store in your application, you can optionally select a data store type. The type has to do with how the library handles intermittent or prolonged interruptions in connectivity to the backend.

Choose a type that most closely resembles your data requirements.

- DataStoreType.Sync: You want a copy of some (or all) of the data from your backend to be available locally on the device, and you would like to sync it periodically with the backend.

- DataStoreType.Auto: You want to read and write data primarily from the backend, but want to be more robust against varying network conditions and short periods of network loss.

- DataStoreType.Network: You want the data in your backend to never be stored locally on the device, even if it means that the app will not offer offline use.

- DataStoreType.Cache (deprecated): You want data stored on the device to optimize your app's performance and/or provide offline support for short periods of network loss. This is the default data store type if a type is not explicitly set.

Head to the Specify Data Store Type section to learn how to request the data store type that you selected.

Entities

The Kinvey service has the concept of entities, which represent a single resource.

Model

In the Kinvey iOS library, an entity is represented by a subclass of the Entity class.

import Kinvey

class Book : Entity, Codable {

@objc dynamic var title: String?

@objc dynamic var authorName: String?

@objc dynamic var publishDate: Date?

override class func collectionName() -> String {

// Return the name of the backend collection

// corresponding to this entity.

return "Book"

}

// Map properties in your backend collection to the members of this entity

enum CodingKeys : String, CodingKey {

case title

case authorName = "author"

case publishDate = "date"

}

// Swift.Decodable

required init(from decoder: Decoder) throws {

// This maps the "_id", "_kmd" and "_acl" properties

try super.init(from: decoder)

let container = try decoder.container(keyedBy: CodingKeys.self)

// Each property in your entity should be mapped

// using the following scheme:

// <#property#> = try container.decodeIfPresent(

// <#property type#>.self,

// forKey: .<#CodingKey property name#>

// )

title = try container.decodeIfPresent(String.self, forKey: .title)

authorName = try container.decodeIfPresent(String.self, forKey: .authorName)

// This maps the backend property "date" to the

// member variable "publishDate" using an ISO 8601 date transform.

publishDate = try container.decodeIfPresent(String.self, forKey: .publishDate)?.toDate()

}

// Swift.Encodable

override func encode(to encoder: Encoder) throws {

// This maps the "_id", "_kmd" and "_acl" properties

try super.encode(to: encoder)

var container = encoder.container(keyedBy: CodingKeys.self)

// Each property in your entity should be mapped

// using the following scheme:

// try container.encodeIfPresent(

// <#property#>,

// forKey: .<#CodingKey property name#>

// )

try container.encodeIfPresent(title, forKey: .title)

try container.encodeIfPresent(authorName, forKey: .authorName)

// This maps the backend property "date" to the

// member variable "publishDate" using an ISO 8601 date transform.

try container.encodeIfPresent(publishDate?.toISO8601(), forKey: .publishDate)

}

/// Default Constructor

required init() {

super.init()

}

/// Realm Constructor

required init(realm: RLMRealm, schema: RLMObjectSchema) {

super.init(realm: realm, schema: schema)

}

/// Realm Constructor

required init(value: Any, schema: RLMSchema) {

super.init(value: value, schema: schema)

}

@available(*, deprecated, message: "Please use Swift.Codable instead")

required init?(map: Map) {

super.init(map: map)

}

}

Supported Types

The following property types are supported by the library:

| Swift Type | JSON Type | Notes |
|---|---|---|
| String | string | |
| Int, Float, Double | number | Variations of Int, such as Int8, Int16, Int32, Int64, are also supported |
| Bool | boolean | |
| Date | string | Kinvey uses the ISO8601 date format. Use KinveyDateTransform to map date objects |
| GeoPoint | array | Refer to the location guide to understand how to persist geolocation information |
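As an illustration of what an ISO 8601 date mapping involves, the following sketch converts between `Date` and a timestamp string using Foundation's `ISO8601DateFormatter`. It is not the SDK's transform. The millisecond precision and trailing "Z" are assumptions based on the `_kmd` timestamps shown in this guide, and the function names are hypothetical.

```swift
import Foundation

// Builds a formatter for strings such as "2020-07-09T16:16:37.597Z".
// Assumption: millisecond precision in UTC, as in the _kmd sample values.
func makeISO8601Formatter() -> ISO8601DateFormatter {
    let formatter = ISO8601DateFormatter()
    formatter.formatOptions = [.withInternetDateTime, .withFractionalSeconds]
    return formatter
}

// Date -> ISO 8601 string (the direction used when encoding an entity).
func iso8601String(from date: Date) -> String {
    return makeISO8601Formatter().string(from: date)
}

// ISO 8601 string -> Date (the direction used when decoding an entity).
// Returns nil when the text is not a valid timestamp in this format.
func date(fromISO8601 text: String) -> Date? {
    return makeISO8601Formatter().date(from: text)
}
```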

The library also provides a way to represent lists of each supported primitive type, as shown below:

| Swift Type | Sample code |
|---|---|
| String | let authorNames = List<StringValue>() |
| Int | let editionsYear = List<IntValue>() |
| Float | let editionsRetailPrice = List<FloatValue>() |
| Double | let editionsRating = List<DoubleValue>() |
| Bool | let editionsAvailable = List<BoolValue>() |
| T | let authors = List<Author>() |

List<T> properties must be immutable: declare them with the let keyword instead of the usual @objc dynamic var declaration.

For List<T> to work with a custom object type such as Author, the type must adhere to the guidelines listed in the custom types section.

Custom Types

This section describes the guidelines to persist a type that is not covered in Kinvey's supported types, including any types that you define in your app.

Enabling your Type to be Cached

The Kinvey iOS SDK uses Realm for persisting data locally in cache. Caching for all the Kinvey-supported types is handled automatically by the SDK. For custom types, you need to extend the Realm Object class to declare that your type should be cached.

When you subclass Object, all the properties of your custom type are enabled for caching, as long as they are in the Realm supported types. If you need help defining the properties of your type, take a look at this cheatsheet.

Mapping Your Type to JSON

The recommended way to map JSON from the backend to entities inside the app is through the use of the Swift Codable protocol. The Object Mapper approach, documented at the bottom, is deprecated.

class Person : Entity, Codable {

@objc dynamic var name: String?

@objc dynamic var address: Address?

override class func collectionName() -> String {

//name of the collection in your Kinvey console

return "Person"

}

}

class Address : Object, Codable {

@objc dynamic var city: String?

@objc dynamic var state: String?

@objc dynamic var country: String?

}

If the backend property names differ from the property names in your class, you can create a CodingKey enumeration as the Codable protocol suggests. For example, you have to create an enumeration if the property name on the backend is Name (uppercase "N") but the property name in the class is name (lowercase "n").

class Person : Entity, Codable {

@objc dynamic var name: String?

@objc dynamic var address: Address?

override class func collectionName() -> String {

//name of the collection in your Kinvey console

return "Person"

}

enum CodingKeys: String, CodingKey {

case name = "Name"

case address = "Address"

}

}

If you need extra flexibility, you can implement the Decodable and/or Encodable protocol methods. Go to the official Swift Codable protocol documentation for sample code.
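The effect of a CodingKeys enumeration can be tried without the SDK. The sketch below uses plain structs instead of Entity and Object subclasses, so `PlainPerson`, `PlainAddress`, and `decodePerson` are hypothetical names; only the key-mapping behavior of Swift's Codable is being shown.

```swift
import Foundation

// Hypothetical plain structs; real entities would subclass Entity or Object.
struct PlainAddress: Codable, Equatable {
    var city: String?
    var state: String?
    var country: String?
}

struct PlainPerson: Codable, Equatable {
    var name: String?
    var address: PlainAddress?

    // The JSON uses "Name" and "Address"; the Swift properties stay lowercase.
    enum CodingKeys: String, CodingKey {
        case name = "Name"
        case address = "Address"
    }
}

// Decodes a person from JSON text using the key mapping above.
func decodePerson(_ json: String) throws -> PlainPerson {
    return try JSONDecoder().decode(PlainPerson.self, from: Data(json.utf8))
}
```

With this mapping, a JSON key spelled "name" (lowercase) is ignored and the property stays nil, which is a quick way to spot a mismatch between backend and class property names.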

The usage of ObjectMapper, documented below, is deprecated and will be removed in a future SDK version. We now recommend using the standard Swift Codable protocol.

To persist your custom type to the backend, you need to tell Kinvey how to map your object to and from JSON. ObjectMapper provides you with two ways to do this:

- Implement Mappable: If your custom type is a class with properties that easily map to Kinvey-supported types, we recommend implementing the Mappable protocol.

class Person : Entity {

@objc dynamic var name: String?

@objc dynamic var address: Address?

override class func collectionName() -> String {

//name of the collection in your Kinvey console

return "Person"

}

@available(*, deprecated, message: "Please use Swift.Codable instead")

override func propertyMapping(_ map: Map) {

super.propertyMapping(map)

name <- ("name", map["name"])

address <- ("address", map["address"])

}

}

class Address : Object {

@objc dynamic var city: String?

@objc dynamic var state: String?

@objc dynamic var country: String?

@available(*, deprecated, message: "Please use Swift.Codable instead")

func mapping(map: Map) {

city <- ("city", map["city"])

state <- ("state", map["state"])

country <- ("country", map["country"])

}

}

- Implement a custom transform: Custom transforms provide a flexible way to map complex types. Your transform programmatically defines the mapping of your custom type to JSON.

class Person: Entity {

@objc dynamic var name: String?

// Here the Person has an imageURL of type URL,

// which is not one of the supported Types.

// To allow the imageURL to be persisted in the cache,

// we use a computed variable of type String.

@objc private dynamic var imageURLValue: String?

var imageURL: URL? {

get {

if let urlValue = imageURLValue {

return URL(string: urlValue)

}

return nil

}

set {

imageURLValue = newValue?.absoluteString

}

}

override class func collectionName() -> String {

return "Person"

}

@available(*, deprecated, message: "Please use Swift.Codable instead")

override func propertyMapping(_ map: Map) {

super.propertyMapping(map)

name <- ("name", map["name"])

imageURL <- ("imageURL", map["imageURL"], URLTransform())

}

}

// This transform allows the URL type to be mapped

// to JSON in the Kinvey backend.

@available(*, deprecated, message: "Please use Swift.Codable instead")

class URLTransform: TransformType {

public typealias Object = URL

public typealias JSON = String

open func transformFromJSON(_ value: Any?) -> URL? {

guard let urlString = value as? String else {

return nil

}

return URL(string: urlString)

}

open func transformToJSON(_ value: URL?) -> String? {

return value?.absoluteString

}

}

Fetching

You can retrieve entities by either looking them up using an ID, or by querying a collection.

A single request to the backend returns at most 10,000 entities. To work within this limit, use paging.

Note that for Sync, Auto, and Cache stores the entity limit does not apply if the result is entirely returned from the local cache.

Fetching by Id

To fetch a single entity by ID, call dataStore.find and pass in the entity ID.

let id = "<#entity id#>"

dataStore.find(id, options: nil) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print("Book: \(book)")

case .failure(let error):

print("Error: \(error)")

}

}

Fetching by Query

To fetch all entities in a collection, call dataStore.find.

dataStore.find() { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Book: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

To fetch multiple entities using a query, call dataStore.find and pass in a query.

let query = Query(format: "title == %@", "The Hobbit")

dataStore.find(query) { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Book: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

It's worth noting that an empty array is returned when the query matches zero entities.

Saving

You can save an entity by calling dataStore.save.

dataStore.save(book, options: nil) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print("Book: \(book)")

case .failure(let error):

print("Error: \(error)")

}

}

The save method acts as an upsert. The library uses the _id property of the entity to distinguish between updates and inserts.

- If the entity has an _id, the library treats it as an update to an existing entity.

- If the entity does not have an _id, the library treats it as a new entity. The Kinvey backend automatically assigns an _id to a new entity.

If you assign a custom _id, you should avoid values that are exactly 12 or 24 symbols in length. Such values are automatically converted to BSON ObjectID and are not stored as strings in the database, which can interfere with querying and other operations.
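A client-side guard is one way to catch such values before they reach the backend. The sketch below is not part of the SDK; `isSafeCustomId` and `sanitizedCustomId` are hypothetical helpers, and "symbols" is approximated by Swift's Character count, which is an assumption.

```swift
// Returns true when a custom _id avoids the two lengths (12 and 24)
// that are converted to BSON ObjectID.
// Assumption: length is measured in Swift Characters.
func isSafeCustomId(_ id: String) -> Bool {
    let length = id.count
    return length != 12 && length != 24
}

// Appends an underscore to an _id with a risky length, leaving others unchanged.
func sanitizedCustomId(_ id: String) -> String {
    return isSafeCustomId(id) ? id : id + "_"
}
```

Calling `sanitizedCustomId` before assigning the value keeps the stored _id a plain string, at the cost of the stored value differing from the one you started with.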

To ensure that no items whose _id is 12 or 24 symbols long are stored in the collection, you can create a pre-save hook that either prevents saving such items, or appends an additional symbol (for example, an underscore) to the _id:

if (_id.length === 12 || _id.length === 24) {

_id += "_";

}

Creating Multiple Entities at Once

To create multiple entities at once, call dataStore.save and pass in an array containing the entities that you want to create:

dataStore.save(books, options: nil) {

switch $0 {

case .success(let result):

let entities = result.entities

let errors = result.errors

entities.forEach {

print($0)

}

errors.forEach {

print($0)

}

case .failure(let error):

print(error)

}

}

The response consists of an entities array that shows the items which were created successfully and an errors array which appears when some items failed to be created. Here is an example response for a request that attempts to create two entities but succeeds only for one of them:

{

"entities": [

{

"_id": "entity-id",

"field": "value1",

"_acl": {

"creator": "5ef49b7576723200150be295"

},

"_kmd": {

"lmt": "2020-07-09T16:16:37.597Z",

"ect": "2020-07-09T16:16:37.597Z"

}

},

null

],

"errors": [

{

"error": "KinveyInternalErrorRetry",

"description": "The Kinvey server encountered an unexpected error. Please retry your request.",

"debug": "An entity with that _id already exists in this collection",

"index": 1

}

]

}

Deleting

To delete an entity, call dataStore.remove(byId:) and pass in the entity _id.

let id = "<#entity id#>"

dataStore.remove(byId: id, options: nil) { (result: Result<Int, Swift.Error>) in

switch result {

case .success(let count):

print("Count: \(count)")

case .failure(let error):

print("Error: \(error)")

}

}

Deleting Multiple Entities at Once

To delete multiple entities at once, call dataStore.remove. Optionally, you can pass in a query to only delete entities matching the query.

let query = Query(format: "title == %@", "The Hobbit")

dataStore.remove(query, options: nil) { (result: Result<Int, Swift.Error>) in

switch result {

case .success(let count):

print("Count: \(count)")

case .failure(let error):

print("Error: \(error)")

}

}

Querying

Queries to the backend are represented as instances of Query. Queries have the ability to gather filter statements using NSPredicate and order statements using NSSortDescriptor. These queries are used with DataStore methods.

Query provides a number of convenience methods for creating queries.

The default constructor makes a query that matches all values in a collection.

let query = Query()

Query instances can be created the same way that NSPredicate instances are created. For instance, the code below shows how to find all entities that exactly match the supplied value.

let query = Query(format: "location == %@", "Mike's House")

Query(format: "field = nil") creates a query that matches entities where the specified field is empty or unset. This will not match fields with the value null.

For a complete guide to NSPredicate, please check the Predicate Programming Guide.

Operators

In addition to exact match and null queries, you can query based on several types of expressions: comparison, set match, string, and array operations.

Conditional queries are built in Query.

Comparison operators

- =, ==: The left-hand expression is equal to the right-hand expression.

- >=, =>: The left-hand expression is greater than or equal to the right-hand expression.

- <=, =<: The left-hand expression is less than or equal to the right-hand expression.

- >: The left-hand expression is greater than the right-hand expression.

- <: The left-hand expression is less than the right-hand expression.

- !=, <>: The left-hand expression is not equal to the right-hand expression.

- BETWEEN: The left-hand expression is between, or equal to either of, the values specified in the right-hand side. The right-hand side is a two value array (an array is required to specify order) giving upper and lower bounds. For example, 1 BETWEEN { 0 , 33 }, or $INPUT BETWEEN { $LOWER, $UPPER }.

For a complete guide of NSPredicate Syntax, please check the Predicate Format String Syntax.

Modifiers

Queries can also have optional modifiers to control how the results are presented. Query allows for limit & skip modifiers and sorting.

Limit and Skip Modifiers

Limit and Skip allow for paging of results. For example, if there are 100 possible results, you can break them up into pages of 20 items by setting the limit to 20, and then incrementing the skip modifier by 0, 20, 40, 60, and 80 in separate queries to eventually retrieve them all.

let pageOne = Query {

$0.skip = 0

$0.limit = 20

}

let pageTwo = Query {

$0.skip = 20

$0.limit = 20

}

// ...

If you set a limit greater than 10,000 in the example above, the backend will silently limit the results to only the first 10,000 entities. For this reason, we strongly recommend fetching your data in pages.

Note that for Auto, Sync, and Cache stores the entity limit does not apply if the result is entirely returned from the local cache.
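The skip and limit arithmetic for paging can be sketched in plain Swift. `Page` and `pages(total:pageSize:)` are hypothetical helpers for illustration, not SDK types; each resulting pair would be assigned to the skip and limit properties of a Query as shown earlier in this section.

```swift
// One page request: how many entities to skip and how many to return.
struct Page: Equatable {
    let skip: Int
    let limit: Int
}

// Computes the skip/limit pairs needed to cover `total` entities
// in pages of `pageSize`. Returns an empty list for non-positive inputs.
func pages(total: Int, pageSize: Int) -> [Page] {
    guard total > 0, pageSize > 0 else { return [] }
    return stride(from: 0, to: total, by: pageSize).map { offset in
        Page(skip: offset, limit: pageSize)
    }
}
```

For 100 entities and a page size of 20, this yields five pages with skip values 0, 20, 40, 60, and 80. When the total is unknown, an alternative is to keep requesting pages until one returns fewer entities than the limit.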

Sort

Query results can also be sorted by adding sort modifiers. Queries are sorted by field values in ascending or descending order, by using NSSortDescriptors.

For example,

let predicate = NSPredicate(format: "name == %@", "Ronald")

let sortDescriptor = NSSortDescriptor(key: "date", ascending: true)

let query = Query(predicate: predicate, sortDescriptors: [sortDescriptor])

To sort by a field and then sub-sort those results by another field, just keep adding additional sort modifiers.
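To show what sub-sorting means for the returned order, here is a local sketch in plain Swift. It sorts an in-memory array rather than running a backend query, and `Row` and `sortedByNameThenYear` are hypothetical names.

```swift
// Hypothetical record with a primary and a secondary sort field.
struct Row: Equatable {
    let name: String
    let year: Int
}

// Sorts by name ascending; rows with equal names are then ordered
// by year descending, which is the "sub-sort" on the second field.
func sortedByNameThenYear(_ rows: [Row]) -> [Row] {
    return rows.sorted { a, b in
        if a.name != b.name {
            return a.name < b.name
        }
        return a.year > b.year
    }
}
```

The second field only affects the order of rows that tie on the first field, which matches the effect of listing two sort descriptors in order.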

Compound Queries

You can make expressive queries by stringing together several queries. For example, you can query for dates in a range or events at a particular day and place.

NSCompoundPredicate can be used to create a query that is a combination of conditionals. For example, the following code queries for cities with two different filter statements combined by an AND operator.

//Search for cities in Massachusetts with a population of at least 50k.

let query = Query(format: "state == %@ AND population >= %@", "MA", 50000)

The code below does the same thing, but using NSCompoundPredicate.

let predicateState = NSPredicate(format: "state == %@", "MA")

// %@ expects an object argument, so the number is wrapped in NSNumber.

let predicatePopulation = NSPredicate(format: "population >= %@", NSNumber(value: 50000))

let predicate = NSCompoundPredicate(andPredicateWithSubpredicates: [predicateState, predicatePopulation])

let query = Query(predicate: predicate)

Special Keys

The following pre-defined constant keys map to Kinvey-specific fields. These can be used in querying and sorting.

- Entity.Key.entityId: the object ID

- Entity.Key.acl: the ACL object, which contains the creator of the object

- Entity.Key.metadata: the metadata object, which contains the entity create time and last modification time

Reduce Functions

The SDK defines the following reduce functions:

- @count: counts all elements in the group

- @sum: sums together the numeric values for the input field

- @min: finds the minimum of the numeric values of the input field

- @max: finds the maximum of the numeric values of the input field

- @avg: finds the average of the numeric values of the input field
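The meaning of the five reduce functions can be illustrated on a single group of values in plain Swift. This is a local sketch, not SDK code; `GroupSummary` and `summarize` are hypothetical names.

```swift
// Results of the five reduce functions for one group of numeric values.
struct GroupSummary: Equatable {
    let count: Int
    let sum: Double
    let min: Double?
    let max: Double?
    let avg: Double?
}

// Computes count, sum, min, max, and avg for the given values.
// min, max, and avg are nil for an empty group.
func summarize(_ values: [Double]) -> GroupSummary {
    let sum = values.reduce(0, +)
    return GroupSummary(
        count: values.count,
        sum: sum,
        min: values.min(),
        max: values.max(),
        avg: values.isEmpty ? nil : sum / Double(values.count)
    )
}
```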

Counting

To count the number of objects in a collection-valued property (such as children in the example below), use the @count keyword.

let query = Query(format: "children.@count == 2")

To count the number of entities in a collection, you should use DataStore.count().

dataStore.count(options: nil) { (result: Result<Int, Swift.Error>) in

switch result {

case .success(let count):

print("Count: \(count)")

case .failure(let error):

print("Error: \(error)")

}

}

You can also pass an optional query parameter to DataStore.count() to count only the entities that match a specific query:

// let query: Query = <#Query(format: "myProperty == %@", myValue)#>

dataStore.count(query, options: nil) { (result: Result<Int, Swift.Error>) in

switch result {

case .success(let count):

print("Count: \(count)")

case .failure(let error):

print("Error: \(error)")

}

}

Aggregation/Grouping

DataStore.group() is an overloaded method that allows you to do grouping and aggregation against your data in the backend. You can write your own reduce function using JavaScript or use pre-defined functions like:

- Count

- Sum

- Average (avg)

- Min (minimum)

- Max (maximum)

For example:

dataStore.group(keys: ["country"], avg: "age", avgType: Int.self, options: nil) { (result: Result<[AggregationAvgResult<Book, Int>], Swift.Error>) in

switch result {

case .success(let array):

print(array)

case .failure(let error):

print(error)

}

}

If you would like to see additional functions, email support@kinvey.com.
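To make the result of a grouped average concrete, here is a local sketch that groups in-memory values by country and averages age within each group. It only mirrors the idea of the group call shown above; it does not run on the backend, and `Reader` and `averageAgeByCountry` are hypothetical names.

```swift
// Hypothetical record with a grouping key and a numeric field.
struct Reader {
    let country: String
    let age: Int
}

// Groups readers by country, then averages the age within each group.
func averageAgeByCountry(_ readers: [Reader]) -> [String: Double] {
    return Dictionary(grouping: readers, by: { $0.country })
        .mapValues { group in
            // Groups produced by Dictionary(grouping:) are never empty.
            Double(group.reduce(0) { $0 + $1.age }) / Double(group.count)
        }
}
```

Each key in the returned dictionary corresponds to one group, in the same way that each element of the aggregation result array corresponds to one distinct value of the grouping key.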

Setting Custom Options

Whenever you need to set an option to a value other than the default for a single request, a collection instance, or a Kinvey instance, you can set it in the Options structure. Although the SDK allows you to set some of the options separately, setting them through the structure is more convenient and tidy in most cases.

To see the complete list of values defined by the structure, check the API Reference.

All properties in the Options structure are optional. The value of nil is assumed for a property that you don't set explicitly, which is the default value.

To set options, first instantiate the Options structure and then pass the instance to the request or collection or Kinvey instance. You can create as many Options instances as you need and set their options differently.

let dataStore = try DataStore<Book>.collection()

let query = Query(format: "title == %@", "The Hobbit")

let options = try Options(readPolicy: .forceNetwork, timeout: 120)

dataStore.find(query, options: options) {

switch $0 {

case .success(let books):

print(books)

case .failure(let error):

print(error)

}

}

Caching and Offline

A key aspect of good mobile apps is their ability to render responsive UIs, even under conditions of poor or missing network connectivity. The Kinvey library provides caching and offline capabilities for you to easily manage data access and synchronization between the device and the backend.

Kinvey's DataStore provides configuration options to solve common caching and offline requirements. If you need better control, you can utilize the options described in Granular Control.

Specify Data Store Type

When initializing a new DataStore to work with a Kinvey collection, you can optionally specify the DataStore type. The type determines how the SDK handles data reads and writes in full or intermittent absence of connection to the app backend.


Sync

Configuring your data store as Sync allows you to pull a copy of your data to the device and work with it completely offline. The library provides APIs to synchronize local data with the backend.

This type of data store is ideal for apps that need to work for long periods without a network connection.

Here is how you should use a Sync store:

// Get an instance

let dataStore = try DataStore<Book>.collection(.sync)

// Pull data from your backend and save it locally on the device.

dataStore.pull() { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

// Find data locally on the device.

dataStore.find() { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

// Save an entity locally on the device. This will add the item to the

// sync table to be pushed to your backend at a later time.

let book = Book()

dataStore.save(book, options: nil) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print("Book: \(book)")

case .failure(let error):

print("Error: \(error)")

}

}

// Sync local data with the backend

// This will push data that is saved locally on the device to your backend;

// and then pull any new data on the backend and save it locally.

dataStore.sync() { (result: Result<(UInt, AnyRandomAccessCollection<Book>), [Swift.Error]>) in

switch result {

case .success(let count, let books):

print("\(count) Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

The Pull, Push, Sync, and Sync Count APIs allow you to synchronize data between the application and the backend.

Auto

Configuring your data store as Auto allows it to primarily work directly with the backend. However, in cases of short network connection interruptions, the library utilizes a local data store to return any results available on the device. Results from previous read requests to the network are stored on the local device with this store type, which is what enables the ability to retrieve results locally when the network cannot be reached.

When your app makes a save request during a network outage, this store type saves the data locally and creates a "pending writes queue" entry to represent the save. You can use the Push operation as soon as the network connection resumes to save the data in the data store.

The following example shows how to use an Auto store:

// Get an instance

let dataStore = try DataStore<Book>.collection(.auto)

// The completion handler will return the data retrieved from

// the network unless the network is unavailable, in which case

// the cached data stored locally will be returned.

dataStore.find() { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

// Save an entity. The entity is saved to the device and then to your backend.

// Without a network connection, the entity is stored locally.

// You will need to push it to the server when network reconnects,

// using dataStore.push().

let book = Book()

dataStore.save(book, options: nil) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print("Book: \(book)")

case .failure(let error):

print("Error: \(error)")

}

}

You can think of this data store as a more robust Network data store, where local storage is used to provide more continuity when the network is temporarily unavailable.
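The read behavior described for the Auto store (use the network when available, otherwise return what earlier reads stored locally) can be sketched in plain Swift. This is a simplified model, not the SDK's implementation: `AutoReader` and `FetchError` are hypothetical, and any thrown error is treated as a network outage.

```swift
// Hypothetical error used to simulate a missing connection.
enum FetchError: Error {
    case offline
}

// Simplified model of an Auto-style read path.
struct AutoReader<T> {
    // Results of the most recent successful network read.
    private(set) var cache: [T] = []

    init() {}

    // Tries the network first. On success, the results replace the cache.
    // On failure, the previously cached results are returned instead.
    mutating func read(fromNetwork fetch: () throws -> [T]) -> [T] {
        do {
            let fresh = try fetch()
            cache = fresh
            return fresh
        } catch {
            return cache
        }
    }
}
```

The model leaves out writes entirely; in the Auto store those go through the pending writes queue and a later push.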

Cache

Cache store type has been deprecated, and will be removed from the SDK entirely as early as December 1, 2019. If you are using the Cache store, please make changes to move to a different store type. The Auto store type has been introduced as a replacement for the Cache store.

Configuring your data store as Cache allows you to use the performance optimizations provided by the library. In addition, the cache allows you to work with data when the device goes offline.

The Cache mode is the default mode for the data store. Most of the time you don't need to set it explicitly.

This type of data store is ideal for apps that are generally used with an active network, but may experience short periods of network loss.

Here is how you should use a Cache store:

// Get an instance

let dataStore = try DataStore<Book>.collection(.cache)

// The completion handler block will be called twice,

// #1 call: will return the cached data stored locally

// #2 call: will return the data retrieved from the network

dataStore.find() { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

// Save an entity. The entity is saved to the device and your backend.

// Without a network connection, the entity is stored locally.

// You will need to push it to the server when network reconnects,

// using dataStore.push().

let book = Book()

dataStore.save(book, options: nil) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print("Book: \(book)")

case .failure(let error):

print("Error: \(error)")

}

}

The Cache data store executes all CRUD requests against local storage as well as the backend. Any data retrieved from the backend is stored in the cache. This allows the app to work offline by fetching data that has been cached from past usage.

The Cache data store also stores pending write operations when the app is offline. However, the developer is required to push these pending operations to the backend when the network resumes. The Push API should be used to accomplish this.

The Pull, Push, Sync, and Sync Count APIs allow you to synchronize data between the application and the backend.

Network

Configuring your data store as Network turns off all caching in the library. All requests to fetch and save data are sent to the backend.

We don't recommend this type of data store for apps in production, since the app will not work without network connectivity. However, it may be useful in a development scenario to validate backend data without a device cache.

Here is how you should use a Network store:

// Get an instance

let dataStore = try DataStore<Book>.collection(.network)

// Retrieve data from your backend

dataStore.find() { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

// Save an entity to your backend.

let book = Book()

dataStore.save(book, options: nil) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print("Book: \(book)")

case .failure(let error):

print("Error: \(error)")

}

}

Pull Operation

Calling pull() retrieves data from the backend and stores it locally in the cache.

By default, pulling retrieves the entire collection to the device. Optionally, you can provide a query parameter to pull to restrict what entities are retrieved. If you prefer to only retrieve the changes since your last pull operation, you should enable Delta Sync.

The pull API needs a network connection in order to succeed.

let dataStore = try DataStore<Book>.collection(.sync)

//In this example, we pull all the data in the collection from the backend

//to the Sync Store

dataStore.pull { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

If your Sync Store has pending local changes, they must be pushed to the backend before pulling data to the store.

Push Operation

Calling push() kicks off a uni-directional push of data from the library to the backend.

The library goes through the following steps to push entities modified in local storage to the backend:

Reads from the "pending writes queue" to determine what entities have been changed locally. The "pending writes queue" maintains a reference for each entity in local storage that has been modified by the app. For an entity that gets modified multiple times in local storage, the queue only references the last modification on the entity.

Creates a REST API request for each pending change in the queue. The type of request depends on the type of modification that was performed locally on the entity.

- If an entity is newly created, the library builds a POST request.

- If an entity is modified, the library builds a PUT request.

- If an entity is deleted, the library builds a DELETE request.

Makes the REST API requests against the backend concurrently. Requests are batched to avoid hitting platform limits on the number of open network requests.

- For each successful request, the corresponding reference in the queue is removed.

- For each failed request, the corresponding reference remains persisted in the queue. The library adds information in the push/sync response to indicate that a failure occurred. Push failures are discussed in Handling Push Failures.

Returns a response to the application indicating the count of entities that were successfully synced and a list of errors for entities that failed to sync.
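The steps above can be sketched as a small, self-contained Swift model. This is only an illustration under stated assumptions: PendingChange, PendingWrite, httpMethod(for:), and push(queue:send:) are hypothetical names, not SDK types, and the sketch sends requests one at a time instead of concurrently in batches.

```swift
// Hypothetical model of the push steps; these are not the Kinvey SDK's types.
enum PendingChange { case created, modified, deleted }

struct PendingWrite {
    let entityId: String
    let change: PendingChange
}

// Map each kind of pending change to the HTTP method of its REST request.
func httpMethod(for change: PendingChange) -> String {
    switch change {
    case .created:  return "POST"
    case .modified: return "PUT"
    case .deleted:  return "DELETE"
    }
}

// Send one request per queued change, remove successes from the queue,
// keep failures, and report the success count plus the ids that failed.
func push(queue: inout [PendingWrite],
          send: (String, PendingWrite) -> Bool) -> (pushed: Int, failedIds: [String]) {
    var remaining: [PendingWrite] = []
    var pushed = 0
    for write in queue {
        if send(httpMethod(for: write.change), write) {
            pushed += 1
        } else {
            remaining.append(write)
        }
    }
    queue = remaining
    return (pushed, remaining.map { $0.entityId })
}

var queue = [
    PendingWrite(entityId: "a", change: .created),
    PendingWrite(entityId: "b", change: .modified),
    PendingWrite(entityId: "c", change: .deleted),
]

// Simulate a backend that rejects the PUT request.
let result = push(queue: &queue) { method, _ in method != "PUT" }
print(result.pushed, result.failedIds)
```

After the call, only the failed write for entity "b" is still in the queue, so a later push would retry it.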

let dataStore = try DataStore<Book>.collection(.sync)

//In this example, we push all local data in this datastore to the backend

//No data is retrieved from the backend.

dataStore.push(options: nil) { (result: Result<UInt, [Swift.Error]>) in

switch result {

case .success(let count):

print("Count: \(count)")

case .failure(let error):

print("Error: \(error)")

}

}

Handling Push Failures

The push response contains information about the entities that failed to push to the backend. For each failed entity, the corresponding reference in the pending writes queue is retained. This is to prevent any data loss during the push operation. Consider these options for handling failures:

- Retry pushing your changes at a later time. You can simply call push again on the data store to try again.

- Ignore the failed changes. You can call purge on the data store, which will remove all pending writes from the queue. The failed entity remains in your local cache, but the library will not attempt to push it again to the backend.

- Destroy the local cache. You can call clear on the data store, which destroys the local cache for the store. You will need to pull fresh data from the backend to start using the cache again.

Each of the APIs mentioned in this section is described in more detail in the data store API reference.

Sync Operation

Calling sync() kicks off a bi-directional synchronization of data between the library and the backend. First, the library calls push to send local changes to the backend. Subsequently, the library calls pull to fetch data in the collection from the backend and stores it on the device.

You can provide a query as a parameter to the sync API to restrict the data that is pulled from the backend. The query does not affect what data gets pushed to the backend.

If you prefer to only retrieve the changes since your last sync operation, you should enable Delta Sync.

let dataStore = try DataStore<Book>.collection(.sync)

// In this sample, we push all local data for this datastore to the backend

// and then pull the entire collection from the backend to the local storage.

dataStore.sync() { (result: Result<(UInt, AnyRandomAccessCollection<Book>), [Swift.Error]>) in

switch result {

case .success(let count, let books):

print("\(count) Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

// In this example, we restrict the data that is pulled from the backend

// to the local storage by specifying a query in the sync API.

let query = Query()

dataStore.sync(query) { (result: Result<(UInt, AnyRandomAccessCollection<Book>), [Swift.Error]>) in

switch result {

case .success(let count, let books):

print("\(count) Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

Sync Count Operation

You can retrieve a count of entities modified locally and pending a push to the backend.

// Number of entities modified offline.

let count = dataStore.syncCount()

Granular Control

Selecting a DataStoreType is usually sufficient to solve the caching and offline needs of most apps. However, should you desire more control over how data is managed in your app, you can use the granular configuration options provided by the library. The following sections discuss the advanced options available on the DataStore.

Data Reads

The way to control Data Reads is to set a ReadPolicy.

Read Policy

ReadPolicy controls how the library fetches data. When you select a store configuration, the library sets the appropriate ReadPolicy on the store. However, individual reads can override the default read policy of the store by specifying a ReadPolicy.

The following read policies are available:

- ForceLocal - forces the datastore to read data from local storage. If no valid data is found in local storage, the request fails.

- ForceNetwork - forces the datastore to read data from the backend. If the network is unavailable, the request fails.

- Both - reads first from the local cache and then attempts to get data from the backend.
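To make the three policies concrete, here is a minimal, self-contained sketch of how each one resolves a read. ReadPolicyModel, ReadError, and read(policy:local:network:) are hypothetical names used only for illustration, not the SDK's own types. As a simplification, the Both case skips a source that is unavailable instead of reporting an error.

```swift
// Hypothetical model; the enum mirrors the policy names above but is not the SDK type.
enum ReadPolicyModel { case forceLocal, forceNetwork, both }

struct ReadError: Error { let reason: String }

// `local` is nil when no valid local data exists.
// `network` is nil when the backend cannot be reached.
// Returns every result set delivered to the caller, in delivery order.
func read(policy: ReadPolicyModel,
          local: [String]?,
          network: [String]?) throws -> [[String]] {
    switch policy {
    case .forceLocal:
        guard let local = local else { throw ReadError(reason: "no valid local data") }
        return [local]
    case .forceNetwork:
        guard let network = network else { throw ReadError(reason: "network unavailable") }
        return [network]
    case .both:
        // Local data is delivered first, then the backend result if it can be fetched.
        var delivered: [[String]] = []
        if let local = local { delivered.append(local) }
        if let network = network { delivered.append(network) }
        return delivered
    }
}

let delivered = try read(policy: .both, local: ["cached"], network: ["fresh"])
print(delivered)
```

With Both, the caller is handed the cached result first and the backend result second, which is why a completion handler may be invoked more than once under that policy in this model.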

Examples

Assume that you are using a datastore with caching enabled (the default), but want to force a certain find request to fetch data from the backend. This can be achieved by specifying a ReadPolicy when you call the find API on your store.

//create a Sync store

let dataStore = try DataStore<Book>.collection(.sync)

//force the datastore to request data from the backend

dataStore.find(Query(), options: try Options(readPolicy: .forceNetwork)) { (result: Result<AnyRandomAccessCollection<Book>, Swift.Error>) in

switch result {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

A tidier approach is to set readPolicy through the Options structure.

let dataStore = try DataStore<Book>.collection(.cache)

// An empty query matches every entity in the collection

let query = Query()

let options = try Options(readPolicy: .forceNetwork)

dataStore.find(query, options: options) {

switch $0 {

case .success(let books):

print("Books: \(books)")

case .failure(let error):

print("Error: \(error)")

}

}

Data Writes

Write Policy

When you save data, the type of datastore you use determines how the data gets saved.

For a store of type Sync, data is written to your local copy. In addition, the library maintains additional information in a "pending writes queue" to recognize that this data needs to be sent to the backend when you decide to sync or push.

For a store of type Auto, data is written to your local copy. Then an attempt is made to write this to the backend. If the write to the backend fails, the library maintains additional information in a "pending writes queue" to recognize that this data needs to be sent to the backend when you decide to sync or push.

For a store of type Cache, data is written first to your local copy and sent immediately to be written to the backend. If the write to the backend fails (e.g. because of network connectivity), the library maintains information to recognize that this data needs to be sent to the backend when connectivity becomes available again. Due to platform limitations, this does not happen automatically, but needs to be initiated by the user by calling the push() or sync() methods.

For a store of type Network, data is sent directly to the backend. If the write to the backend fails, the library does not persist the data for a future write.
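The differences between store types can be summarized in a short, self-contained sketch. StoreKind, WriteOutcome, and save(in:backendReachable:) are hypothetical names for illustration and are not part of the SDK. The deprecated Cache type is left out because, per the description above, its write path mirrors Auto.

```swift
// Hypothetical model of where a save ends up for each store type.
enum StoreKind { case sync, auto, network }

struct WriteOutcome: Equatable {
    var savedLocally = false
    var sentToBackend = false
    var queuedForPush = false
}

// Returns nil when the write is lost, which can only happen for Network stores.
func save(in store: StoreKind, backendReachable: Bool) -> WriteOutcome? {
    switch store {
    case .sync:
        // Local write only; the change waits in the queue for push or sync.
        return WriteOutcome(savedLocally: true, sentToBackend: false, queuedForPush: true)
    case .auto:
        // Local write, then a backend attempt; queue only if that attempt fails.
        return WriteOutcome(savedLocally: true,
                            sentToBackend: backendReachable,
                            queuedForPush: !backendReachable)
    case .network:
        // Backend only; a failed write is not persisted for later.
        return backendReachable ? WriteOutcome(sentToBackend: true) : nil
    }
}

print(save(in: .auto, backendReachable: false) as Any)
```

In this model, only Network stores can drop a write outright; Sync and Auto always leave a local copy and a queue entry to push later when the backend write has not happened.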

The following example sets Network as a write policy. Set this way, this write policy will be used only for the save operation being requested, overriding the default Cache mode for the collection.

let book = Book()

let dataStore = try DataStore<Book>.collection()

let options = try Options(writePolicy: .forceNetwork)

dataStore.save(book, options: options) { (result: Result<Book, Swift.Error>) in

switch result {

case .success(let book):

print(book)

case .failure(let error):

print(error)

}

}

Timeout

When performing any datastore operations, you can pass a timeout value as an option to stop the datastore operation after some amount of time if it hasn't already completed.

// an NSTimeInterval value in seconds, which means 2 minutes in this case

Kinvey.sharedClient.options = try Options(timeout: 120)

The default timeout for the iOS library is 60 seconds.

Conflict Resolution

When using sync and cache stores, you need to be aware of situations where multiple users could be working on the same entity simultaneously offline. Consider the following scenario:

- User X edits entity A offline.

- User Y edits entity A offline.

- Network connectivity is restored for X, and A is synchronized with Kinvey.

- Network connectivity is restored for Y, and A is synchronized with Kinvey.

In the above scenario, the changes made by user X are overwritten by Y.

The libraries and backend implement a default mechanism of "client wins", which implies that the data in the backend reflects the last client that performed a write. Custom conflict management policies can be implemented with Business Logic.
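The scenario can be reproduced with a tiny, self-contained model of "client wins". The backend dictionary and the synchronize(id:offlineCopy:) function are hypothetical stand-ins, assuming each write simply replaces the stored entity.

```swift
// Hypothetical backend model: each write replaces the stored entity outright.
var backend: [String: [String: String]] = ["A": ["title": "Original"]]

func synchronize(id: String, offlineCopy: [String: String]) {
    backend[id] = offlineCopy
}

let editByX = ["title": "Edited by X"]
let editByY = ["title": "Edited by Y"]

synchronize(id: "A", offlineCopy: editByX)  // X reconnects first
synchronize(id: "A", offlineCopy: editByY)  // Y reconnects later and overwrites X

print(backend["A"]?["title"] ?? "missing")
```

Because the last write replaces the whole entity, nothing from X's edit survives once Y synchronizes.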

Delta Sync

When your app handles large amounts of data, syncing entire collections can be expensive in terms of both bandwidth and speed, especially on slower networks. Rather than syncing the entire collection, fetching only new and updated entities can save bandwidth and improve your app's response times.

To help optimize fetching collection data, Kinvey implements Delta Sync, also known as data differencing. When an app performs a pull or find request for a collection that has the Delta Sync feature turned on, the library asks the backend only for those entities that have been created, modified, or deleted since the app last made that same request. This allows the backend to return only a small subset of data rather than the entire set of query results. The library then processes the data and updates its local cache appropriately.

Delta Sync requires the data store to be running in Auto, Cache, or Sync mode.

Calculating the delta is offloaded to the backend for better performance.

Limitations

Delta Sync can bring significant read performance improvements in most situations, but keep the following limitations in mind:

- Delta Sync does not guarantee data consistency between the server and the client:

- If, on the server, you update an entity, changing the field on which you have previously queried the entity, the entity will not appear as updated in the data delta. This leaves a data discrepancy between the server and the client that you can rectify by making a full sync.

- If, on the server, you use permissions to deny the user read access to an entity that is already cached on the user device, the data delta will not return the entity as updated or deleted.

- External data coming from FlexData or RapidData is not supported.

- Delta Sync is not supported for the User and Files collections.

- If your collection has a Before Business Logic collection hook that calls response.complete(), Delta Sync requests will not execute and the response from your hook will be returned.

- If the request features skip or limit modifiers, the library does a normal Find or Pull and does not utilize Delta Sync.

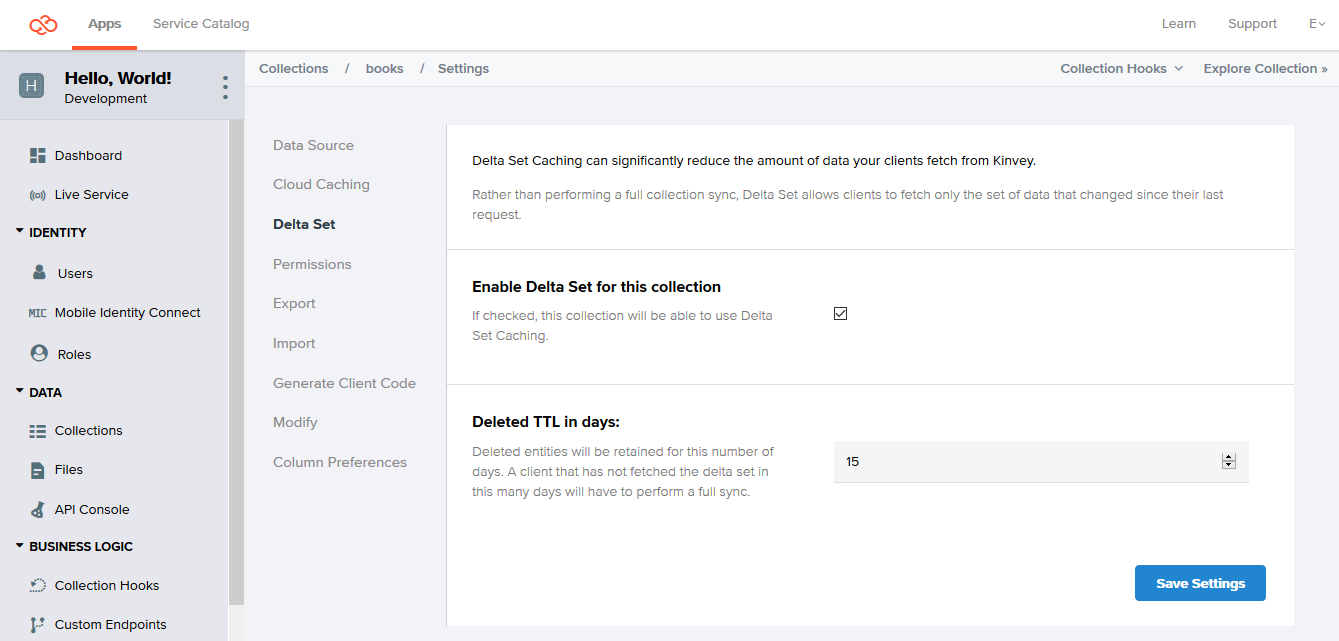

Configuring Delta Sync

The Delta Sync feature is configured per collection. The performance benefits of Delta Sync will be most noticeable on large collections that update infrequently. On the other hand, it may make sense to keep this feature turned off for small collections. This is because fetching the entire collection, if it's small, is expected to be faster than waiting for the server to calculate the delta and send it back.

Delta Sync is turned off by default for collections.

To turn on Delta Sync for a collection:

- Log in to the Console.

- Navigate to your app and select an environment to work with.

- Under Data, click Collections.

- On the collection card you want to configure, click the Settings icon.

- From the Settings menu, click Delta Set.

- Click Enable Delta Set for this collection.

- Optionally, change the default Deleted TTL in days value.

The Deleted TTL in days option specifies the change history, or the maximum period for which information about deleted collection entities is stored. This change history is required for building a delta. Delta Sync queries requesting changes that precede this period return an error. The library then automatically requests a full sync.
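The effect of the Deleted TTL can be pictured with a small, self-contained check. canServeDelta(lastRequest:now:deletedTTLDays:) is a hypothetical helper, assuming the backend compares the time of the previous request against the retention window; the real server-side logic is not documented here.

```swift
import Foundation

// Hypothetical check: can a delta be built for a request last made at `lastRequest`?
func canServeDelta(lastRequest: Date, now: Date, deletedTTLDays: Int) -> Bool {
    let window = TimeInterval(deletedTTLDays) * 24 * 60 * 60
    return now.timeIntervalSince(lastRequest) <= window
}

let now = Date()
let tenDaysAgo = now.addingTimeInterval(-10 * 24 * 60 * 60)

// Inside a 15-day window a delta can be built; with 7 days a full sync is needed.
print(canServeDelta(lastRequest: tenDaysAgo, now: now, deletedTTLDays: 15))
print(canServeDelta(lastRequest: tenDaysAgo, now: now, deletedTTLDays: 7))
```

A longer TTL lets devices that sync rarely keep using deltas, at the cost of the backend retaining deletion records for longer.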

Keeping and returning information about deletions is important, because without it, when receiving the data on the client, you won't be able to determine why the entity is missing from the data delta: because it has been deleted or because it has stayed unchanged.

Turning off Delta Sync for a collection results in permanently removing all information about deleted entities from the server. If you turn on Delta Sync again for the collection at a later stage, the accumulation of information about deleted entities starts from the beginning.

Using Delta Sync

To use Delta Sync, you need to set a flag on the data store instance you are working with.

// Create a Sync data store with Delta Sync enabled

let dataStore = try DataStore<Book>.collection(.sync, options: Options(deltaSet: true))

After that, data deltas are requested automatically by the library for this data store but only under certain conditions. The library only sends a delta request if all of the following requirements are met. Otherwise it performs a regular pull or find.

- Delta Sync is turned on for the underlying collection on the backend.

- The data store you are working with is in Auto, Cache, or Sync mode.

- The request that you are making is cached, or in other words, it's not the first time you are making it.

- The request does not feature skip or limit modifiers unless it is the library doing autopaging.
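The requirements listed above amount to a single boolean check, sketched here in self-contained Swift. DeltaRequestContext and shouldRequestDelta(_:) are hypothetical names for illustration; the library's internal decision logic may be structured differently.

```swift
// Hypothetical description of one outgoing read request.
struct DeltaRequestContext {
    var collectionHasDeltaSync: Bool   // turned on for the collection on the backend
    var storeKeepsLocalCache: Bool     // the store is not in Network mode
    var requestSeenBefore: Bool        // the same request is already cached
    var hasSkipOrLimit: Bool           // the query carries skip or limit modifiers
    var isAutopaging: Bool             // the modifiers were added by library autopaging
}

// A delta is requested only when every requirement holds.
func shouldRequestDelta(_ c: DeltaRequestContext) -> Bool {
    return c.collectionHasDeltaSync
        && c.storeKeepsLocalCache
        && c.requestSeenBefore
        && (!c.hasSkipOrLimit || c.isAutopaging)
}

var context = DeltaRequestContext(collectionHasDeltaSync: true,
                                  storeKeepsLocalCache: true,
                                  requestSeenBefore: true,
                                  hasSkipOrLimit: false,
                                  isAutopaging: false)
let repeatedRequest = shouldRequestDelta(context)

// The very first execution of a request always falls back to a regular read.
context.requestSeenBefore = false
let firstRequest = shouldRequestDelta(context)
print(repeatedRequest, firstRequest)
```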

On receiving the delta, the library takes care of deleting those local entities that the delta marked as deleted and creating or updating the respective new or modified entities from the delta.

With Delta Sync in use, the count returned by find and pull operations changes. Instead of returning the full count of entities inside the collection, each operation returns the number of entities contained in the returned data delta.

Error Handling and Troubleshooting

The library makes using Delta Sync transparent to you, handling Delta Sync-related errors internally. In case you need to track errors linked to this feature, you can enable the library logging at debug level.

The library will still propagate any errors that are not specific to Delta Sync. Examples of such errors include network connectivity issues and authentication errors, as well as errors specific to Find and Pull requests made by the library in case the delta request has errored out.

Forced Network Requests

Even in Auto, Sync, and Cache data store modes, you can send out forced Network requests if you set the respective request-level option. Such requests will still get Delta Sync support because the local requests cache is already available on the device.

How it Works

Delta Sync builds on top of information about previous read requests kept in the local cache maintained in Auto, Cache, and Sync data store modes. For this reason, Delta Sync does not operate in Network mode.

To make Delta Sync possible, the backend stores records of deleted entities (a change history) for a configurable amount of time. Records are stored for each collection that has the Delta Sync option turned on.

When your app code sends a read request, the library checks the local cache to see if the request has been executed before. If it has, the library makes a request for the data delta instead of executing the request directly.

On the backend, the server executes the query normally, but also uses the change history to determine which entities that had matched the query the previous time have been deleted. This way, the server can return information to help the library determine which entities to delete from the local cache.

The backend runs any Before or After Business Logic hooks that might be in place (see Limitations).

The server response contains a pair of arrays: one listing entities created or modified since the last execution time, and another listing entities deleted since that time.

Using the returned data, the library reconstructs the data on the server locally, taking the current state of the cache as a basis. It first deletes all entities listed in the deleted array, so that if any entity was deleted and then re-created with the same ID, it would not be lost. After that, the library caches any newly-created entities and updates existing ones, completing the process.
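The ordering described above (deletions first, then creations and updates) can be sketched in self-contained Swift. Entity, Delta, and apply(_:to:) are hypothetical names for illustration, and the local cache is modeled as a plain dictionary keyed by entity ID.

```swift
struct Entity: Equatable {
    let id: String
    var title: String
}

// Hypothetical shape of a delta response: one array per kind of change.
struct Delta {
    var changed: [Entity]   // created or modified since the last request
    var deleted: [String]   // ids deleted since the last request
}

func apply(_ delta: Delta, to cache: inout [String: Entity]) {
    // Deletions first, so an entity deleted and re-created with the same id survives.
    for id in delta.deleted {
        cache.removeValue(forKey: id)
    }
    // Then insert new entities and overwrite modified ones.
    for entity in delta.changed {
        cache[entity.id] = entity
    }
}

var cache = [
    "1": Entity(id: "1", title: "Old"),
    "2": Entity(id: "2", title: "Untouched"),
]

// Entity "1" was deleted on the backend and then re-created with the same id.
let delta = Delta(changed: [Entity(id: "1", title: "Re-created")], deleted: ["1"])
apply(delta, to: &cache)
print(cache["1"]?.title ?? "missing")
```

If the two loops ran in the opposite order, the re-created entity "1" would be written and then immediately removed from the cache.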

Additional Information

The Kinvey data store also comes with other features that are optional or have more limited applications.

Automatic Paging

Autopaging is an SDK feature that allows you to query your collection as normal and receive all results without worrying about the 10,000-entity count limit imposed by the backend.

If you expect queries to a collection to regularly return a result count that exceeds the backend limit, you may want to enable autopaging instead of using the limit and skip modifiers every time.

Autopaging works by automatically applying limit and skip in the background and storing all received pages in the offline cache. For that reason, autopaging does not work with data stores of type Network.

Autopaging only works with pulling. When you pull with autopaging enabled to refresh the local cache, the SDK reads and stores locally all entities in a collection or a subset of them if you pass a limiting query. It automatically uses paging if the entry count exceeds the backend-imposed limit.
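The paging loop can be pictured with a self-contained sketch. fetchPage(from:skip:limit:) and pullAll(from:pageSize:) are hypothetical names, the backend collection is modeled as an in-memory array, and the page size of 10 stands in for the real backend limit.

```swift
// Hypothetical page fetcher standing in for a backend query with skip and limit.
func fetchPage(from collection: [Int], skip: Int, limit: Int) -> [Int] {
    guard skip < collection.count else { return [] }
    return Array(collection[skip..<min(skip + limit, collection.count)])
}

// Pull every entity by advancing skip one page at a time and storing each page locally.
func pullAll(from collection: [Int], pageSize: Int) -> [Int] {
    precondition(pageSize > 0, "pageSize must be positive")
    var localCache: [Int] = []
    var skip = 0
    while true {
        let page = fetchPage(from: collection, skip: skip, limit: pageSize)
        localCache.append(contentsOf: page)
        if page.count < pageSize { break }   // a short page means the end was reached
        skip += pageSize
    }
    return localCache
}

let backendCollection = Array(1...25)
let pulled = pullAll(from: backendCollection, pageSize: 10)
print(pulled.count)
```

Each page is fetched against whatever the collection looks like at that moment, which is why entities written during a paged pull can be missed.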

To enable autopaging, set the autoPagination option for the collection you are reading:

let dataStore = try DataStore<Book>.collection(.sync, autoPagination: true)

After you have all the needed entities persisted on the local device, you can call Find as normal. Depending on the store type, the operation is executed against the local store only or against both the local store and the backend. For executions against the local cache, the maximum result count as imposed by the backend does not apply.

Autopaging is subject to the following caveats:

- Autopaging, similarly to manual paging, has the potential to miss new entities when such are written to the backend collection while a paged Pull is in progress. Each next page retrieval always works on the latest state of the collection which may change between the first and the last page retrieval.

- Enabling autopaging may have performance implications on your app depending on the collection size and the device performance. Fetching large amounts of data can be slow and working with it locally increases the memory and storage footprint on the device.

- When autopaging is enabled, any limit and skip modifiers on outgoing queries are ignored.
